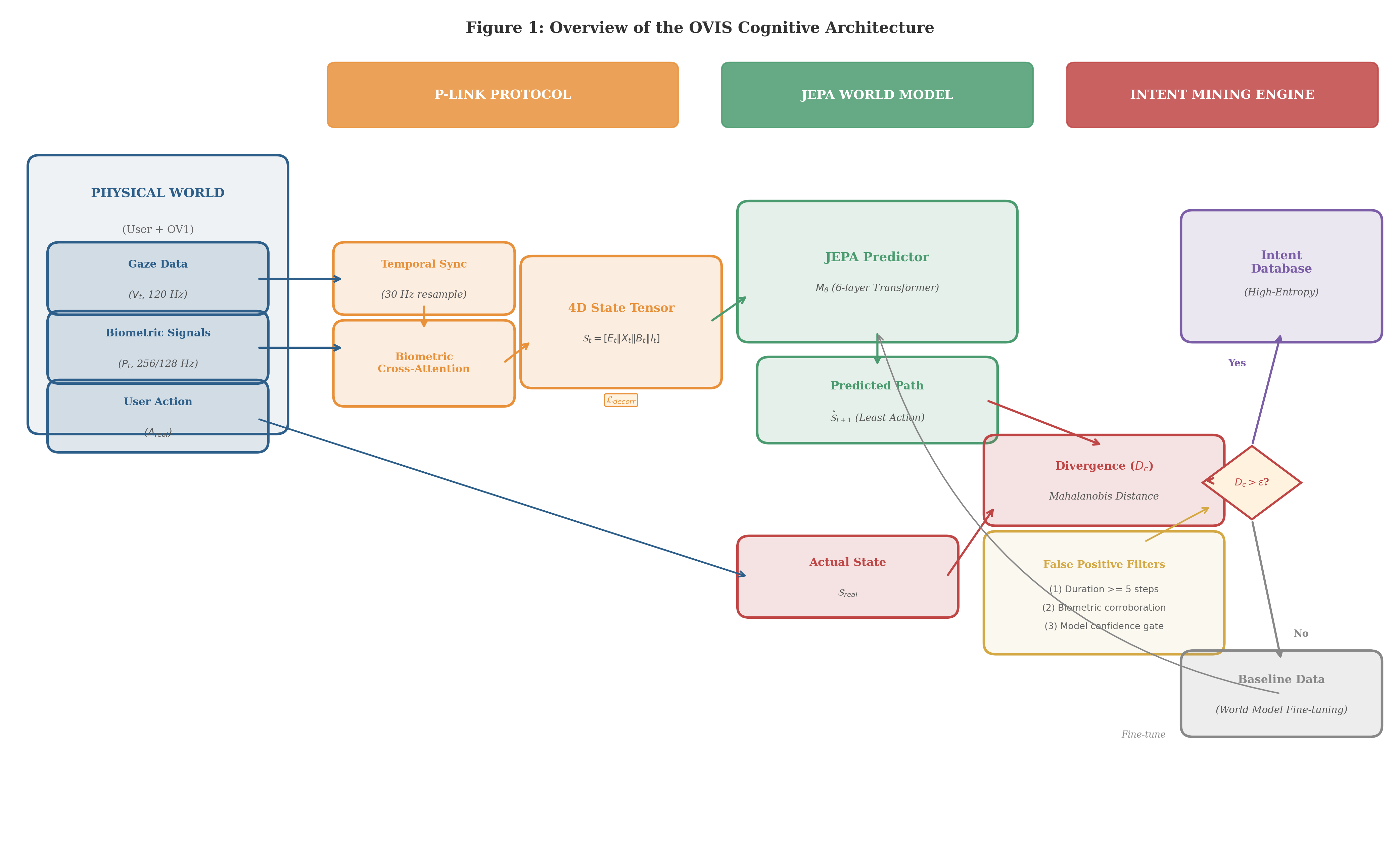

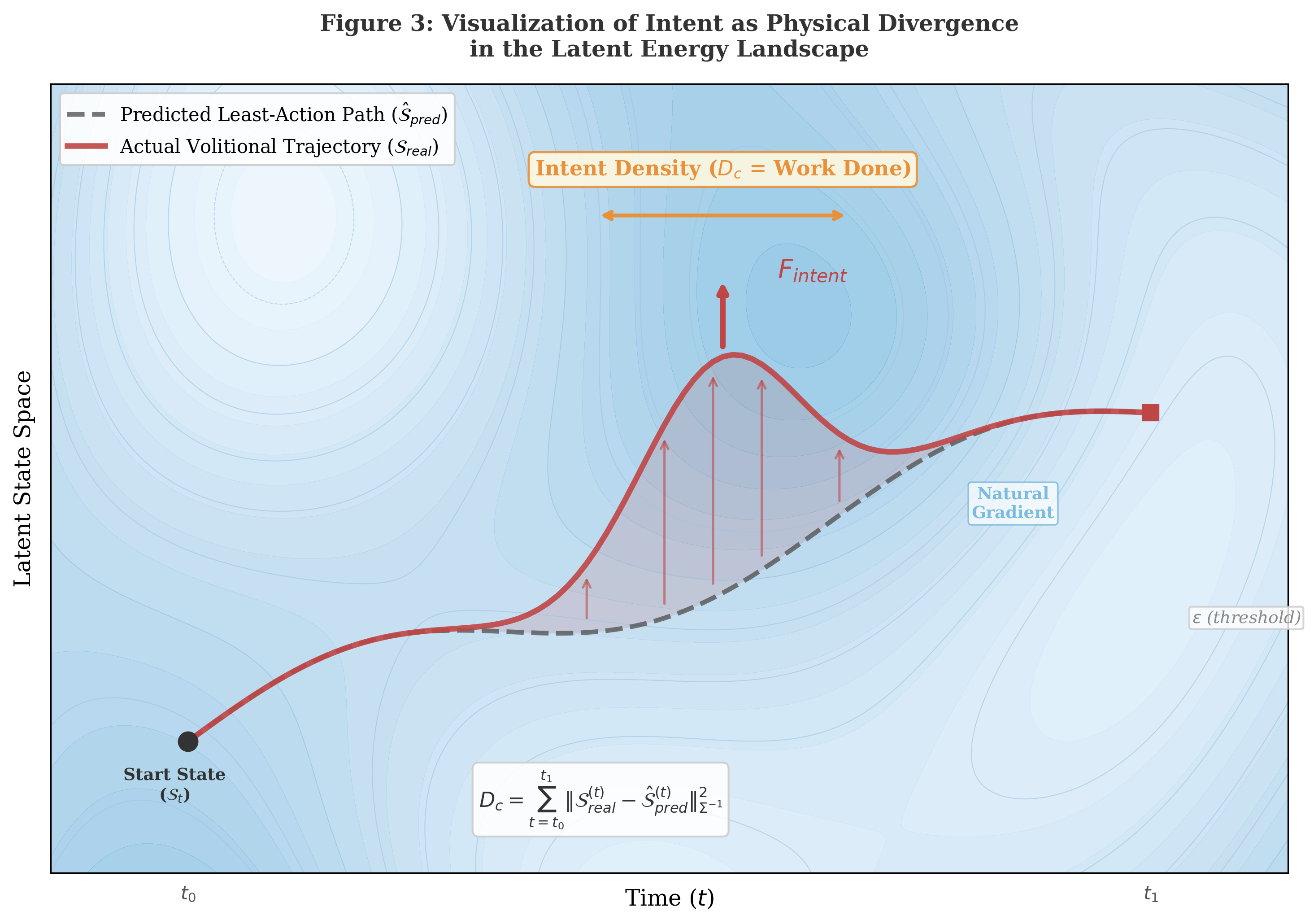

OUNG is building the full stack of embodied AI: the device that senses human intent, the cognitive architecture that formalizes it, and the aligned intelligence that learns from it. Based in Singapore and Seoul.

Founded by Jae-Woong Kim, a brand strategist and project leader whose strategic repositioning of two companies led to CES Innovation Awards, including CES 2025 Best of Innovation and a CES 2026 Innovation Award. His background spans brand strategy, business vision, and product design across AI, robotics, and consumer hardware. He is now building at the intersection of embodied cognition, AI safety, and consumer technology.